It was really hot last weekend, so my son and I ended up staying home. To kill time, I played a small game called “Zuma Deluxe” with him. It’s probably a very old game, but still quite fun to play. While playing, as a software developer, I started wondering how I would design such a game if I were asked to make one. One question was how to store the level design data in a file, and that question eventually led to this blog post.

The first thing I tried was Google. Searching for “Zuma level file format” led me to this thread: http://spherematchers.proboards.com/thread/62/mod-zuma-deluxe?page=2. People there seem to be discussing the level description file (an XML file) and the image files, but the most important one, the “Curve” file, is not addressed and remains a secret.

So I thought about it: if I were told to design the game, a simple way would be to store the track as multiple straight line segments. You end up with a list of (x, y) pairs, each one a point on the curve. Connect all the points with lines, and you get the curve!

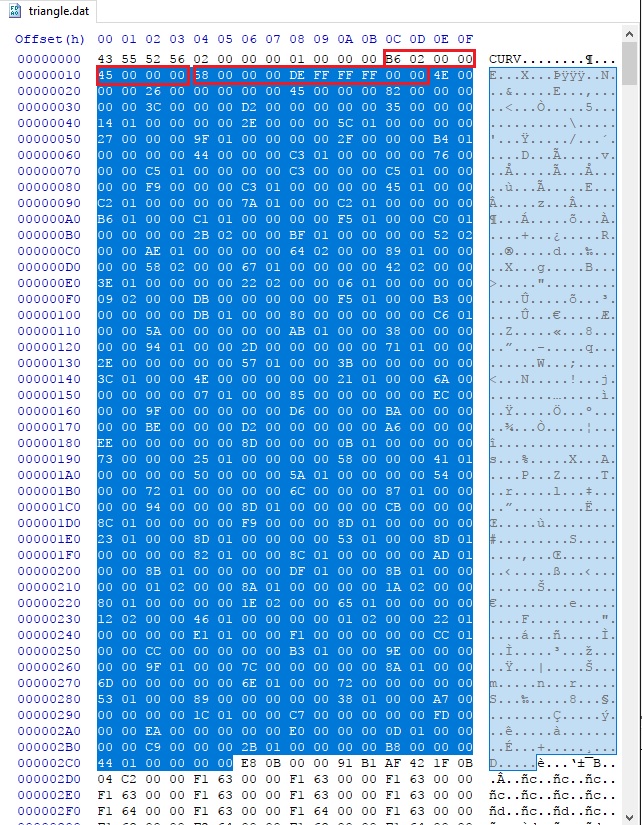

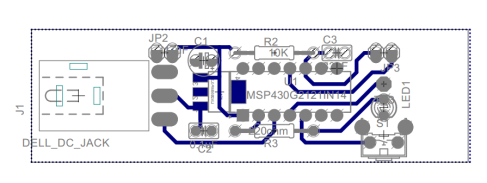

So is that how the game developers actually did it? Let’s take a look. A quick dive into the game installation folder shows data files under the “levels” directory, for example, <zuma_root_dir>/levels/triangle/triangle.dat.

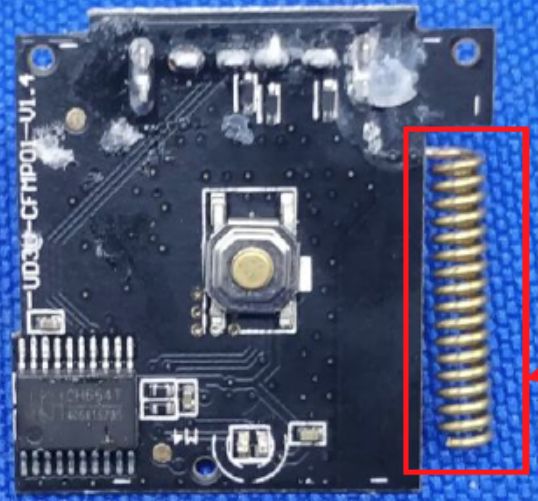

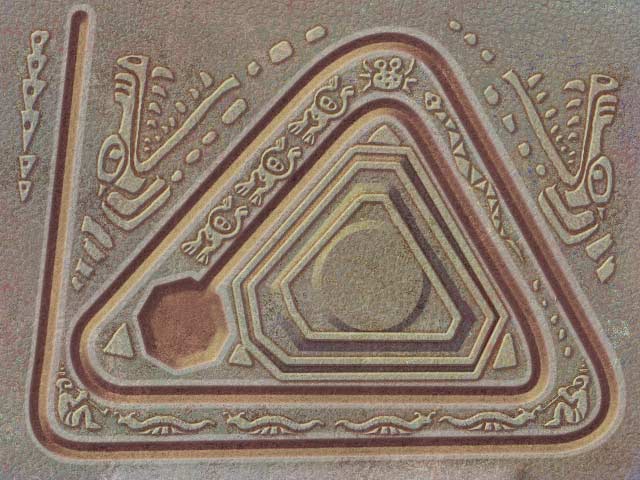

So let’s start with this “triangle” level. The background picture of this level is this:

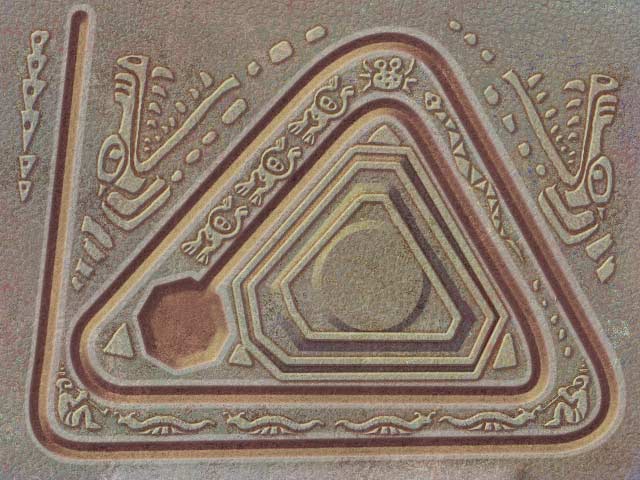

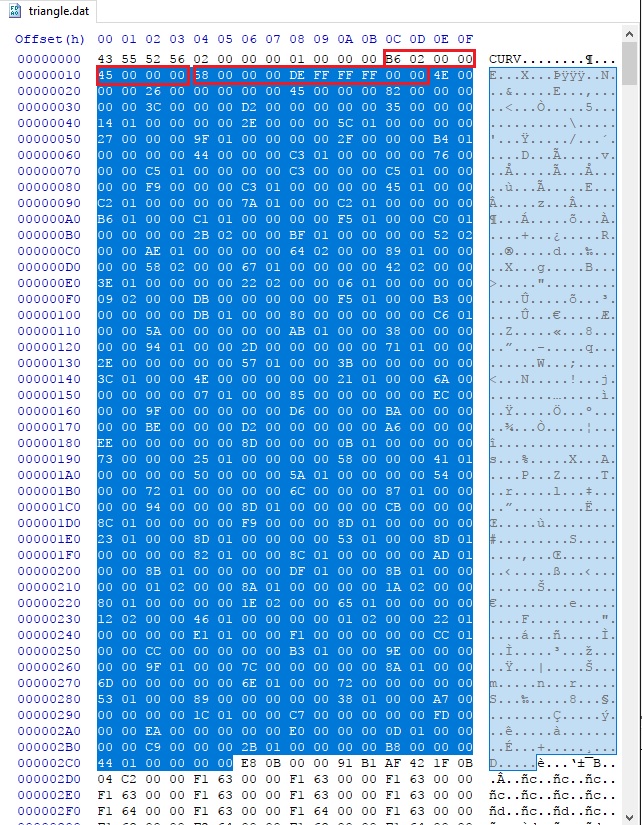

So I’m expecting the .dat file to describe a curve matching the picture. Opening the file with a hex editor shows the following content:

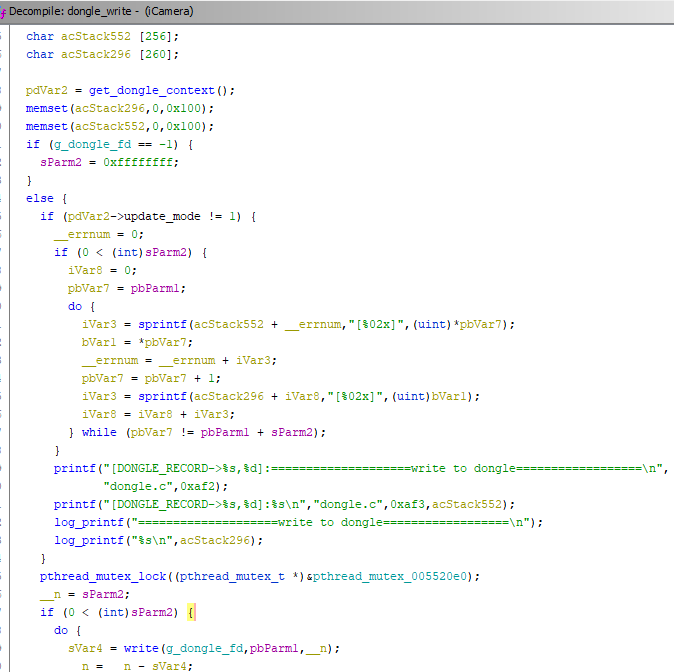

Just by eyeballing the hex digits, I can tell it has a 16-byte header (the first row), which seems to have the following structure:

    typedef struct
    {
        char signature[4];
        uint32_t unk1, unk2;
        uint32_t size;
    } header_t;

The “size” field is the one I’m particularly interested in, as it gives the length of the first section of the level data. Looking at the bytes, I realized that the data begins with a “count”, followed by a group of elements of 10 bytes each. Let’s say each element has the following structure:

    typedef struct
    {
        int32_t x;
        int32_t y;      /* signed: the first point has a negative y */
        uint16_t unk;
    } elem_t;

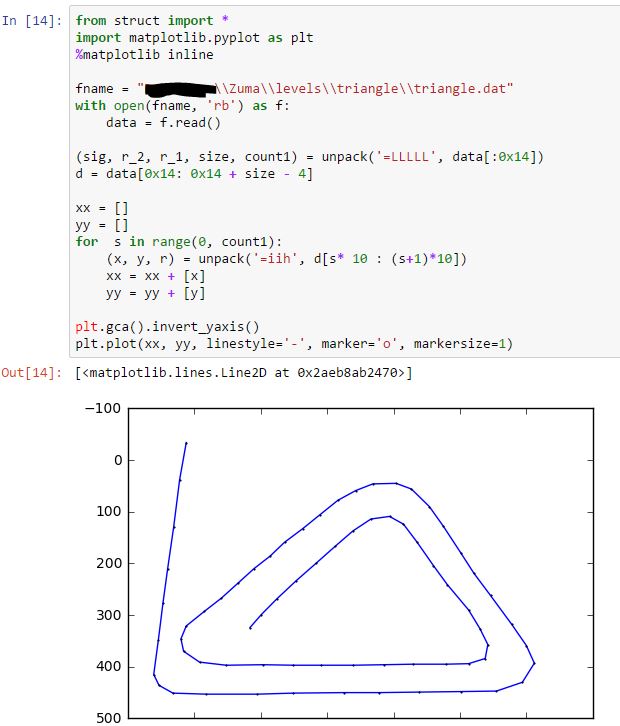

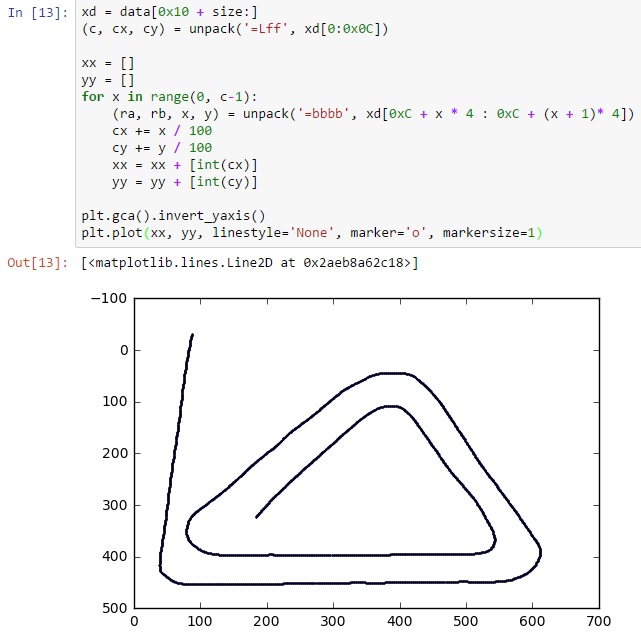

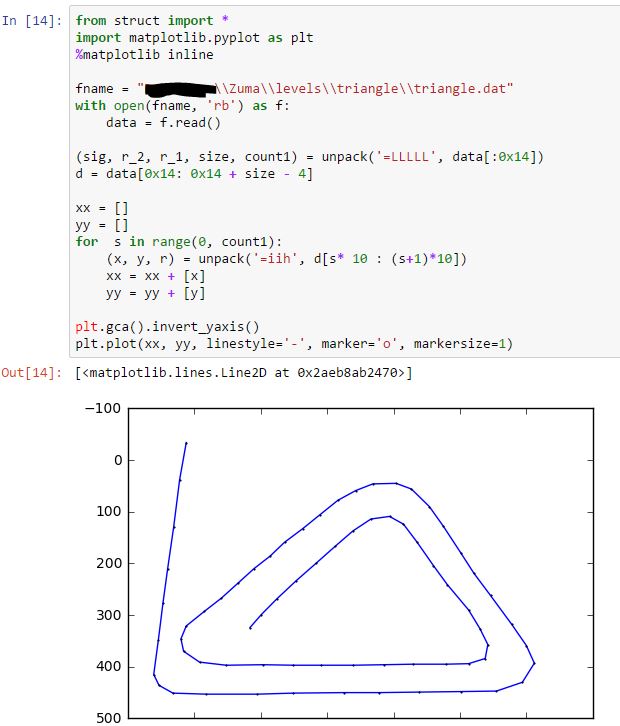

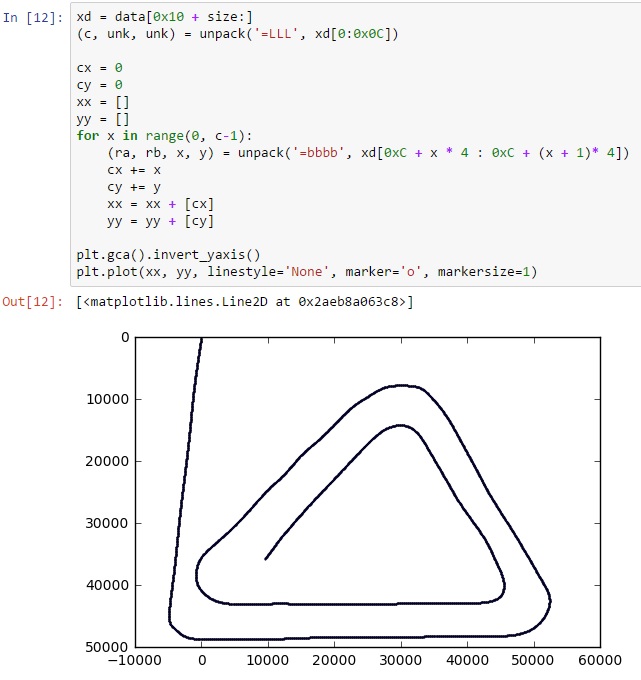

I don’t know if I’m guessing correctly, so let’s try plotting the data. I’m using a Python notebook for this task.
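A minimal sketch of how the header and the first section could be read, based only on the two structures guessed above. The little-endian byte order, the position of the “count” right after the 16-byte header, and the function name `parse_first_section` are my assumptions, not confirmed facts about the file format:

```python
import struct

# Assumed layouts, both little-endian with no padding.
HEADER = struct.Struct("<4sIII")  # signature, unk1, unk2, size (16 bytes)
ELEM = struct.Struct("<iiH")      # x, y, unknown 16-bit field (10 bytes)


def parse_first_section(data):
    """Return (header_fields, points) parsed from the raw bytes of a .dat file.

    points is a list of (x, y, unk) tuples, one per 10-byte element.
    """
    header_fields = HEADER.unpack_from(data, 0)
    offset = HEADER.size
    # Assumption: a 32-bit element count follows the header directly.
    (count,) = struct.unpack_from("<I", data, offset)
    offset += 4
    points = [
        ELEM.unpack_from(data, offset + i * ELEM.size) for i in range(count)
    ]
    return header_fields, points
```

Feeding it the bytes of triangle.dat and passing the x and y values to a plotting library should draw the track, if the guessed layout is right. Screen coordinates grow downwards, so the y axis probably needs to be flipped to match the background picture.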

Bingo! It matches what I was guessing! While doing this, the first element confused me for quite a while, as it has a negative “y” coordinate, so at first I skipped it. Later I tried to make sense of it and found a good explanation: the curve starts outside of the screen. So, mystery solved! Well, not completely, as there is still an unknown “short” field.

Anyway, I was eager to try this out, so I altered a couple of points and loaded the level in the game, expecting something strange to happen. But nothing! The game ran as usual, as if nothing had been changed.

So what’s going on? I realized that I had only decoded the first section of the level file, not the entire file. What’s left?

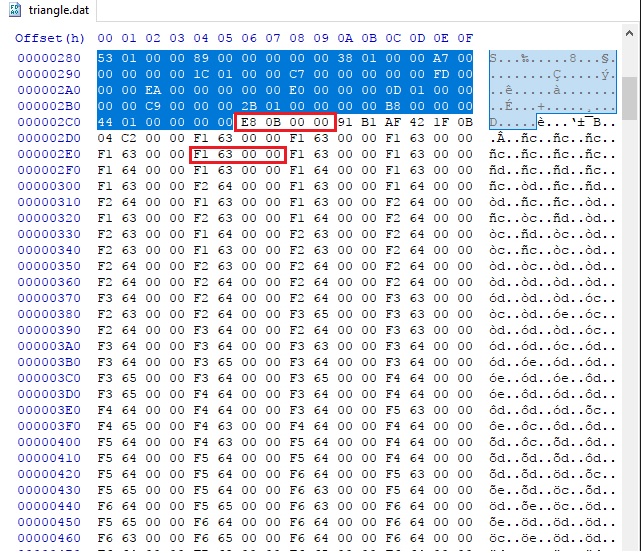

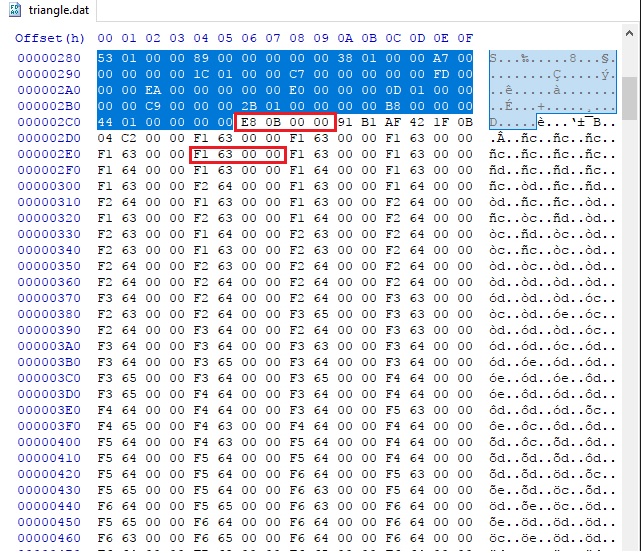

So here is the second section. Following the logic of the first section, the first 4 bytes look like another “size” field, but the value doesn’t quite match.

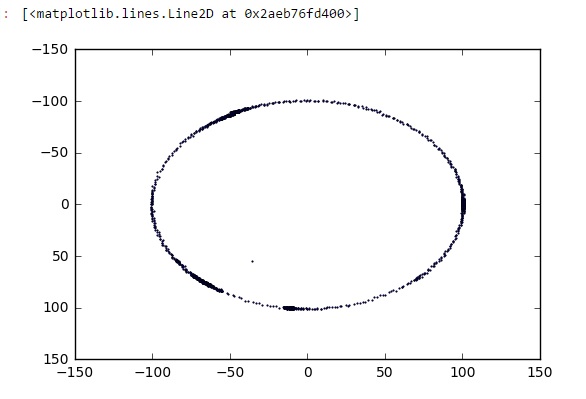

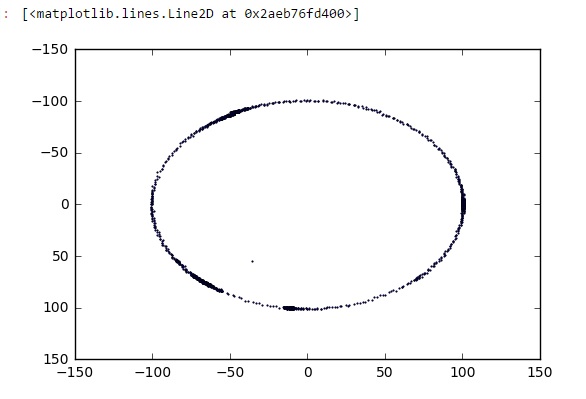

Anyway, let’s continue. After a couple of strange numbers, the rest of the file, all the way to the end, looks like a list of integers with slowly changing values. First I tried to plot them as (x, y) pairs, but that didn’t come out very well. I don’t know what I was thinking, but I somehow plotted the first two bytes of each 4-byte group as (x, y) pairs, and the result looked like a circle of dots.
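The step of picking the first two bytes out of every 4-byte group can be sketched like this. Reading the two bytes as signed values is an assumption on my side (it is what makes the dots form a circle around the origin), and `first_two_bytes` is just an illustrative name:

```python
import struct


def first_two_bytes(raw):
    """Split raw bytes into 4-byte groups and return the first two bytes of
    each group as a signed (a, b) pair. The last two bytes of every group
    are ignored, and so is any incomplete group at the end."""
    usable = len(raw) - len(raw) % 4
    return [(a, b) for a, b, _, _ in struct.iter_unpack("<bbBB", raw[:usable])]
```

The `raw` argument is meant to be the tail of the second section, after the strange numbers at its beginning.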

Yes! This is definitely something! But how can a circle of dots relate to a curved track? Anyway, I started messing around with the data, and found:

- As long as the file stays consistent with the header, changing the first section doesn’t make any visual difference. I even tried replacing the entire section with a zero-sized stub, and the game still ran perfectly well.

- Replacing the second section with that of another level file changes the game completely. It’s like using this level’s background picture with the other level’s track.

These findings really make me think that the first section is not used at all. Then how does the second section define the curve?

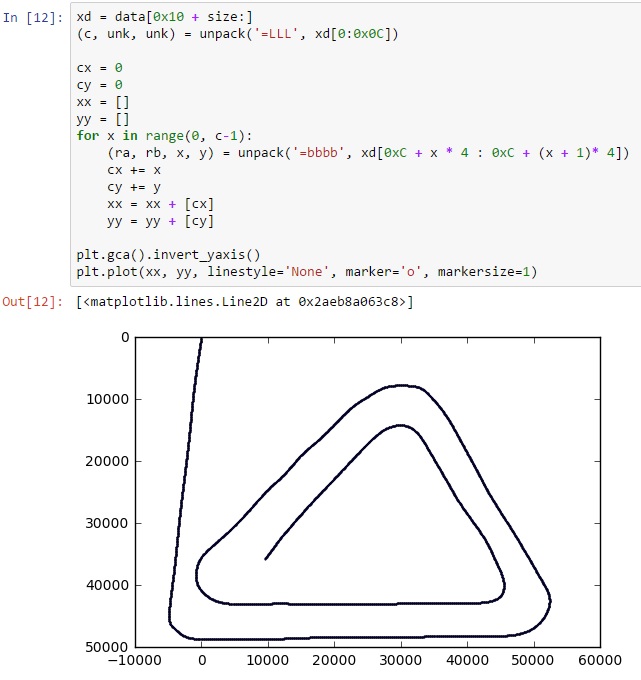

I scratched my head for more than an hour while my son kept bugging me with all sorts of weird things. Then suddenly, I got it! It could be an array of “delta_x, delta_y” values for the points on the track!

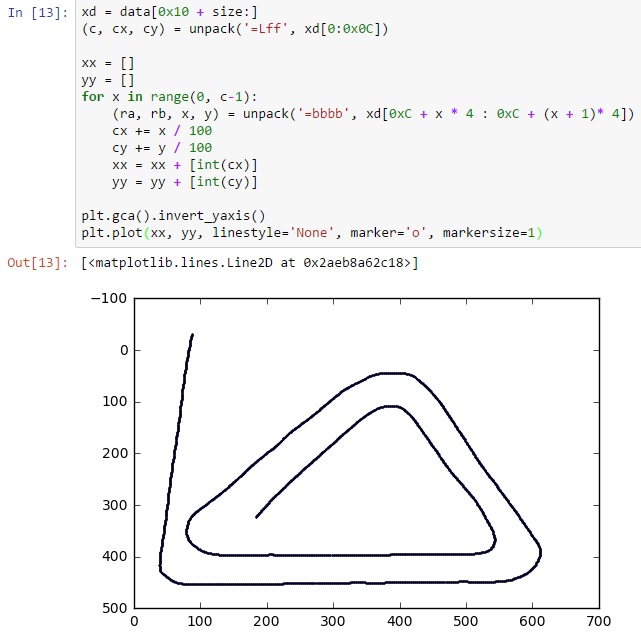

To verify that, I plotted the running sum of those deltas. Again, the first few bytes are strange, so I skipped them.
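Turning deltas into absolute points is just a running sum. A small sketch, with `accumulate_deltas` as a made-up helper name and the starting point defaulting to (0, 0) because the real origin is not known at this stage:

```python
from itertools import accumulate


def accumulate_deltas(deltas, start=(0, 0)):
    """Turn a list of (dx, dy) steps into absolute (x, y) points,
    beginning at start. The result has one more point than there are steps."""
    xs = accumulate((dx for dx, _ in deltas), initial=start[0])
    ys = accumulate((dy for _, dy in deltas), initial=start[1])
    return list(zip(xs, ys))
```

For example, the steps `[(1, 0), (1, 1), (0, -2)]` give the points `[(0, 0), (1, 0), (2, 1), (2, -1)]`.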

Fantastic! This proves my theory! Now all I need is to figure out two things:

- the right scale: apparently the image I got is at a very wrong scale

- the origin: I’m expecting something within 640×480, and the first point should be consistent with the value defined in the first section

The first one is easy: looking at the current range, I think all I need is to divide cx and cy by 100. The second one took me a couple of seconds, looking at the strange bytes at the beginning of the section:

91 B1 AF 42 1F 0B 04 C2

Again, if I were designing this game and storing all the deltas, the first point would have to be stored somewhere. As mentioned, section 1 is not used at all, so it must be in section 2, which leaves only these bytes. But their values don’t look like integers at all!

I knew almost instantly what to look at: floating-point numbers! Read as two 32-bit floats, those eight bytes give the starting point. As for the deltas, storing them at 100 times their on-screen size is a sensible way to keep sub-pixel precision in integer form, so dividing them by 100 recovers the real screen coordinates.
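The eight bytes can be checked quickly with Python’s `struct` module. The little-endian byte order is an assumption here, though a reasonable one for a Windows game:

```python
import struct

# The eight "strange" bytes from the beginning of the second section.
raw = bytes.fromhex("91 B1 AF 42 1F 0B 04 C2")

# Interpret them as two little-endian 32-bit floats.
start_x, start_y = struct.unpack("<ff", raw)
print(round(start_x, 2), round(start_y, 2))
```

This gives roughly (87.85, -33.01): a point just above the top edge of the screen, which fits the earlier observation that the curve starts outside of the screen.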

So that makes the following complete code:
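A minimal sketch of what such code could look like, under the assumptions collected so far: the starting point is two little-endian floats, every following 4-byte group begins with a signed delta_x and delta_y, and the deltas are stored at 100 times screen size. The function name `rebuild_track` and the way the two byte ranges are passed in are my own choices; the exact offsets inside the file are not pinned down here, so the caller has to slice them out:

```python
import struct
from itertools import accumulate

SCALE = 100.0  # assumption: deltas are stored at 100x their on-screen size


def rebuild_track(start_bytes, delta_bytes):
    """Rebuild the track as a list of (x, y) screen coordinates.

    start_bytes: the 8 bytes holding the starting point as two floats.
    delta_bytes: the 4-byte groups that follow; only the first two bytes
                 of each group (signed delta_x, delta_y) are used.
    """
    start_x, start_y = struct.unpack("<ff", start_bytes)
    usable = len(delta_bytes) - len(delta_bytes) % 4
    steps = list(struct.iter_unpack("<bbBB", delta_bytes[:usable]))
    # Sum the integer deltas first, then scale, to avoid float drift.
    sum_x = accumulate((dx for dx, _, _, _ in steps), initial=0)
    sum_y = accumulate((dy for _, dy, _, _ in steps), initial=0)
    return [(start_x + sx / SCALE, start_y + sy / SCALE)
            for sx, sy in zip(sum_x, sum_y)]
```

The returned points can be drawn on top of the level’s background picture to check that the track lines up.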

Mission accomplished!

So what’s next? Maybe a custom Zuma level designer?